Machine learning vocabulary

Kurt McIntire

04.10.2020

Here's a list of terminology that I encountered during my machine learning studies. I'll push updates to this blog post as a living document.

Basics

Model

- A function or algorithm that maps inputs to a prediction.

- Example. I can use a model to predict the value of a home, given information about that home (number of bedrooms, neighborhood, or an image of the home's exterior).

Supervised learning

- Training a model with labeled data, in which each example includes the correct answer (the label). Then, using that model to predict the label for new inputs, either a category or a numeric value.

- Example. Training a model with home sale information, which includes bedroom count, neighborhood, and price (the label). Then, using this model to predict the price of a home, given its bedroom count and neighborhood.

Unsupervised learning

- Training a model with unlabeled data, meaning there is no specific value to predict. Then, using that model to find patterns, groupings, or trends within the dataset.

- Example. Training a model with home sale information, which includes bedroom count, neighborhood, and price. Then, using this model to identify homes that sold for unusual amounts, or to discover that homes in a certain neighborhood typically have three bedrooms.

Validation set

- Data held out from training and used to check how accurately a model performs on examples it has not seen.

- Example. Training a model to predict home price, given neighborhood and bedroom count. The validation set would be a dataset of home prices, neighborhoods, and bedroom counts that the model did not use for training. This validation set can be used to test and verify that the model is accurate.

Training set

- The data used to train a model.

- Example. Training a model to predict home price, given neighborhood and bedroom count. The training set would be a dataset of home prices, neighborhoods, and bedroom counts within a timespan. The model learns its weights from this training set.
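A minimal sketch, in Python, of how one labeled dataset might be split into a training set and a validation set. The home rows, neighborhood names, and the 20% validation fraction are hypothetical, and `train_validation_split` is an illustrative helper rather than a library function. Shuffling first helps keep either set from being skewed by the original row order.

```python
import random

def train_validation_split(rows, validation_fraction=0.2, seed=0):
    """Shuffle a copy of rows, then hold out a fraction of them for validation."""
    shuffled = list(rows)                  # copy so the caller's list isn't reordered
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split repeatable
    n_validation = int(len(shuffled) * validation_fraction)
    validation_set = shuffled[:n_validation]
    training_set = shuffled[n_validation:]
    return training_set, validation_set

# Hypothetical home sales: (neighborhood, bedroom count, price)
homes = [
    ("Northside", 3, 450_000), ("Northside", 2, 380_000),
    ("Northside", 4, 520_000), ("Riverside", 3, 610_000),
    ("Riverside", 2, 540_000), ("Riverside", 4, 700_000),
    ("Hillcrest", 3, 390_000), ("Hillcrest", 2, 330_000),
    ("Hillcrest", 4, 460_000), ("Hillcrest", 5, 510_000),
]

training_set, validation_set = train_validation_split(homes)
print(len(training_set), len(validation_set))  # 8 2
```

The model only ever learns from `training_set`; `validation_set` is saved for checking its predictions afterward.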

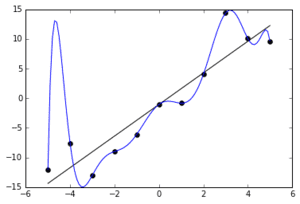

Overfitting

- Training a model so closely to the training set that it fits that data, including its noise, almost perfectly, but fails to make accurate predictions on a validation set.

- Example. Training a model that accurately predicts home price, given neighborhood and bedroom count, for the homes in the training set. The model is overfitted if it cannot accurately predict home price, given neighborhood and bedroom count, for the homes in the validation set.

Overfitting. The black, straight line indicates an accurate, useful fit given the dataset. The blue, squiggly line represents overfitting. Source: Wikipedia
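To make overfitting concrete, here's a small sketch using NumPy's `Polynomial.fit`. The data is hypothetical: the trend y = 2x + 1 plus a fixed alternating ±0.3 wobble standing in for noise. It compares a degree-1 fit (a straight line) with a degree-9 fit. With 10 training points, the degree-9 polynomial can pass through every point, so its training error is essentially zero, but it swings wildly between the points it memorized.

```python
import numpy as np
from numpy.polynomial import Polynomial

def make_data(x):
    """Hypothetical data: the trend y = 2x + 1 plus an alternating +/-0.3 wobble as 'noise'."""
    wobble = 0.3 * (-1.0) ** np.arange(len(x))
    return 2 * x + 1 + wobble

x_train = np.linspace(0, 1, 10)
y_train = make_data(x_train)
x_val = (x_train[:-1] + x_train[1:]) / 2   # validation points sit between training points
y_val = make_data(x_val)

def mse(model, x, y):
    """Mean squared error of a fitted polynomial on (x, y)."""
    return float(np.mean((model(x) - y) ** 2))

errors = {}
for degree in (1, 9):
    model = Polynomial.fit(x_train, y_train, degree)   # least-squares polynomial fit
    errors[degree] = (mse(model, x_train, y_train), mse(model, x_val, y_val))
    print(f"degree {degree}: train MSE {errors[degree][0]:.4f}, "
          f"validation MSE {errors[degree][1]:.4f}")
```

On this data, the degree-9 model has the lower training error but a far larger validation error than the straight line, which is the overfitting pattern described above.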

Weights

- Learned values in a model that set how much each input feature contributes to the model's output.

- Example. Training a model with home sale information, which includes bedroom count, neighborhood, and price. During training, the model adjusts its weights to capture how much neighborhood and bedroom count each matter when determining price.
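A sketch of how weights are used once they're learned, assuming a simple linear model in Python. The neighborhood is assumed to already be converted into a hypothetical numeric score, and the weight and bias values are made up for illustration.

```python
def predict_price(features, weights, bias):
    """Linear model: a weighted sum of the input features plus a bias term."""
    return sum(w * x for w, x in zip(weights, features)) + bias

# Hypothetical learned values: each bedroom adds $50,000, each point of a
# (hypothetical) 1-10 neighborhood score adds $20,000, on a $100,000 base.
weights = [50_000, 20_000]
bias = 100_000

price = predict_price([3, 8], weights, bias)  # 3 bedrooms, neighborhood score 8
print(price)  # 410000
```

A larger weight means that feature moves the predicted price more.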

Batch gradient descent

- An optimization process for determining a model's weights. It uses calculus (derivatives) to compute the gradient of the error with respect to each weight over the entire training set (the "batch"), then repeatedly adjusts each weight a small step in the direction that reduces that error.
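A minimal batch gradient descent sketch in Python with NumPy, fitting a linear model `X @ w + b` by minimizing mean squared error. The learning rate, epoch count, and the toy data (generated from y = 3x + 2) are illustrative assumptions. Every update uses the gradient over the whole training set, which is what the word "batch" refers to.

```python
import numpy as np

def batch_gradient_descent(X, y, learning_rate=0.05, epochs=2000):
    """Fit weights w and bias b so that X @ w + b approximates y, minimizing mean squared error.

    Each update uses the gradient computed over the entire training set (the "batch").
    """
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    for _ in range(epochs):
        error = X @ w + b - y                       # prediction error for every example
        grad_w = (2 / n_samples) * (X.T @ error)    # derivative of MSE with respect to w
        grad_b = (2 / n_samples) * error.sum()      # derivative of MSE with respect to b
        w -= learning_rate * grad_w                 # step each weight against its gradient
        b -= learning_rate * grad_b
    return w, b

# Toy training set generated from y = 3x + 2 (a hypothetical example)
X = np.array([[1.0], [2.0], [3.0], [4.0]])
y = 3 * X[:, 0] + 2
w, b = batch_gradient_descent(X, y)
print(w, b)  # close to [3.] and 2.0
```

A learning rate that's too large can make the updates overshoot and diverge, so it usually needs tuning per problem.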

Neural network

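- A model made up of layers of connected nodes (neurons). Each node computes a weighted sum of its inputs and passes it through an activation function, and the weights are learned iteratively, typically with gradient descent. A basic (feedforward) neural network is stateless: each output depends only on the current input.

- Example. Training a model to predict home price, given neighborhood and bedroom count. A neural network could generate the model by learning weights for each input feature and for the connections between its layers.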
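A sketch of a forward pass through a tiny neural network with one hidden layer, in Python with NumPy. The layer sizes, the ReLU activation, the random (untrained) weights, and the numeric neighborhood score are assumptions for illustration; training would adjust `W1`, `b1`, `W2`, and `b2`, for example with gradient descent.

```python
import numpy as np

def relu(z):
    """Activation function: keep positive values, replace negatives with 0."""
    return np.maximum(0, z)

def forward(x, W1, b1, W2, b2):
    """Forward pass of a network with one hidden layer.

    Each hidden node takes a weighted sum of the inputs plus a bias and applies ReLU;
    the output node takes a weighted sum of the hidden nodes plus a bias.
    """
    hidden = relu(W1 @ x + b1)
    return W2 @ hidden + b2

rng = np.random.default_rng(0)
W1 = rng.normal(size=(4, 2))   # 2 inputs -> 4 hidden nodes (random, untrained)
b1 = np.zeros(4)
W2 = rng.normal(size=(1, 4))   # 4 hidden nodes -> 1 output (the price estimate)
b2 = np.zeros(1)

x = np.array([3.0, 8.0])       # bedroom count, hypothetical neighborhood score
output = forward(x, W1, b1, W2, b2)
print(output.shape)  # (1,)
```

Calling `forward` again with the same input returns the same output, which is what "stateless" means here.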

Convolutional neural network

- A type of neural network designed for images. Instead of looking at a whole image at once, it slides small learned filters (kernels) across the image, applying the same weights to every small patch (sub-image), which lets it detect local features such as edges wherever they appear.

- Example. Training a model to predict home price, given a photo of a house. During training, a convolutional neural network learns filters that respond to small patches of each image, such as edges, windows, or rooflines. Later layers combine these features to predict the price.
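A sketch of the core convolution step in Python with NumPy: one 2x2 kernel slides across a tiny hypothetical grayscale image, reusing the same weights at every position. The kernel here is hand-picked to respond to a vertical dark-to-light edge; in a real CNN the kernel values are learned, and libraries run many kernels at once with much faster implementations.

```python
import numpy as np

def conv2d(image, kernel):
    """Slide one kernel over an image (stride 1, no padding) and return the feature map.

    Like most deep learning libraries, the kernel is not flipped, so strictly this is
    cross-correlation. The same kernel weights are reused at every position.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]     # the current small sub-image
            out[i, j] = np.sum(patch * kernel)   # weighted sum using the shared kernel
    return out

image = np.array([[0, 0, 1, 1]] * 4, dtype=float)  # dark left half, light right half
kernel = np.array([[-1.0, 1.0],
                   [-1.0, 1.0]])                   # responds to dark-to-light vertical edges
edges = conv2d(image, kernel)
print(edges)
# [[0. 2. 0.]
#  [0. 2. 0.]
#  [0. 2. 0.]]
```

The output is largest in the middle column, exactly where the edge sits in the image.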

Recurrent neural network

- A stateful neural network that processes a sequence one step at a time, keeping a hidden state that carries information from previous inputs forward. Generally useful for natural language processing.

- Example. Training a model to predict the next word in a sentence, given the previous word or words. Stateless models have no knowledge of previous inputs, whereas recurrent neural networks use their hidden state to let previous inputs inform each prediction.
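A minimal sketch of a simple (Elman-style) recurrent layer in Python with NumPy. The dimensions, the hand-picked weights, and the two-number vectors standing in for words are all hypothetical. The hidden state `h` is fed back in at each step, so two sequences that end with the same word can still produce different final states.

```python
import numpy as np

def rnn_forward(inputs, W_x, W_h, bias):
    """Run a minimal recurrent layer over a sequence of input vectors.

    Returns the hidden state after each step. The state h is fed back in at every
    step, so each output depends on the current input and on everything before it.
    """
    h = np.zeros(W_h.shape[0])                    # empty state before the first input
    states = []
    for x in inputs:
        h = np.tanh(W_x @ x + W_h @ h + bias)     # combine the new input with the previous state
        states.append(h)
    return states

# Hypothetical weights: 2-number inputs, 3-number hidden state
W_x = np.array([[0.5, -0.3], [0.2, 0.8], [-0.6, 0.1]])
W_h = np.array([[0.1, 0.4, -0.2], [-0.3, 0.2, 0.5], [0.6, -0.1, 0.3]])
bias = np.zeros(3)

# Hypothetical 2-number vectors standing in for words
word_a, word_b, word_c = np.array([1.0, 0.0]), np.array([0.0, 1.0]), np.array([0.5, 0.5])

states_1 = rnn_forward([word_a, word_c], W_x, W_h, bias)
states_2 = rnn_forward([word_b, word_c], W_x, W_h, bias)
# Same final word, different earlier word -> different final state
print(np.allclose(states_1[-1], states_2[-1]))  # False
```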

Max pooling

- An operation that downsamples a feature map (the output of a convolutional layer) by keeping only the largest value in each small window, such as each 2x2 block. This makes later processing faster and less computationally intensive.

- Example. Training a model to predict home price, given a photo of a house. Within a convolutional neural network, max pooling downsamples the feature maps produced by the convolutional layers, keeping only the strongest response in each region of the house image.
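A sketch of 2x2 max pooling with stride 2 in Python with NumPy, using a made-up 4x4 feature map. Each non-overlapping 2x2 block is replaced by its largest value, halving both the height and the width.

```python
import numpy as np

def max_pool_2x2(feature_map):
    """Downsample a 2D feature map by keeping the largest value in each 2x2 block.

    Uses non-overlapping windows (stride 2); an odd last row or column is dropped.
    """
    h, w = feature_map.shape
    h, w = h - h % 2, w - w % 2
    blocks = feature_map[:h, :w].reshape(h // 2, 2, w // 2, 2)  # group values into 2x2 blocks
    return blocks.max(axis=(1, 3))                              # max within each block

# A made-up 4x4 feature map, e.g. the output of a convolutional layer
feature_map = np.array([
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [3, 2, 0, 1],
])
print(max_pool_2x2(feature_map))
# [[4 5]
#  [3 7]]
```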

Categorical variables

- A variable that is made up of a finite number of unique values.

- Example. Retriever breeds: Lab, Golden, Flat-coated, Curly-coated.

Continuous variables

- A variable that can take any value within a range, so it has an infinite number of possible values.

- Example. Age: any value from 0 upward, such as 34.5.

Tensors

Scalar

- A single number, also a zero-dimensional tensor.

- Example.

42

Vector

- An array of numbers, also a one-dimensional tensor.

- Example.

[42, 15, 100, 18]

Matrix

- An array of vectors, also a two-dimensional tensor.

- Example.

[
  [42, 15, 2],
  [32, 16, 72],
  [18, 100, 86],
]

3D Tensor

- An array of matrices, also a three-dimensional tensor.

- Example.

[
  [
    [42, 15, 2],
    [32, 16, 72],
    [18, 100, 86],
  ],
  [
    [52, 15, 2],
    [42, 16, 72],
    [28, 100, 86],
  ],
  [
    [62, 15, 2],
    [52, 16, 72],
    [38, 100, 86],
  ],
]
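The tensor examples above map directly onto NumPy arrays, where `ndim` reports the number of dimensions and `shape` the size along each one. A short Python sketch, assuming NumPy is installed:

```python
import numpy as np

scalar = np.array(42)
vector = np.array([42, 15, 100, 18])
matrix = np.array([
    [42, 15, 2],
    [32, 16, 72],
    [18, 100, 86],
])
tensor_3d = np.array([
    [[42, 15, 2], [32, 16, 72], [18, 100, 86]],
    [[52, 15, 2], [42, 16, 72], [28, 100, 86]],
    [[62, 15, 2], [52, 16, 72], [38, 100, 86]],
])

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix), ("3D tensor", tensor_3d)]:
    print(name, t.ndim, t.shape)
# scalar 0 ()
# vector 1 (4,)
# matrix 2 (3, 3)
# 3D tensor 3 (3, 3, 3)
```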

Classification types

Binary classification

- A method of classification in which the predicted value is one of exactly two classes, such as true or false.

- Example. Training a model to identify email spam, given an input email. A binary classifier will predict that an input email is either spam (true) or not spam (false).

Multiclass classification

- A method of classification in which the predicted value is a single class, chosen from a set of possible classes.

- Example. Training a model to predict a single home type, given a photo of a house. A multiclass classifier will predict one home type, like Craftsman or Cape Cod. It will not return an array of possible home types.

Multi-label classification

- A method of classification in which the predicted value is an array of classes, since any number of labels can apply to the same input.

- Example. Training a model to predict classes given a photo of a house. A multi-label classification will predict an array of classes for the home, like "Craftsman, yard, sky, blue, shingle roof".
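A sketch of how the three classification types commonly turn raw model scores into predictions, in Python with NumPy. The scores, the 0.5 threshold, and the extra "Colonial" and "pool" classes are hypothetical; in practice a trained model would produce the scores.

```python
import numpy as np

def sigmoid(z):
    """Squash a score into a probability between 0 and 1."""
    return 1 / (1 + np.exp(-z))

def softmax(z):
    """Turn a vector of scores into probabilities that sum to 1."""
    e = np.exp(z - np.max(z))   # subtracting the max avoids overflow
    return e / e.sum()

# Binary: one score -> one yes/no answer (spam or not spam)
spam_score = 2.0
is_spam = bool(sigmoid(spam_score) > 0.5)

# Multiclass: one score per class -> exactly one class is chosen
home_types = ["Craftsman", "Cape Cod", "Colonial"]
type_scores = np.array([2.5, 0.3, -1.0])
predicted_type = home_types[int(np.argmax(softmax(type_scores)))]

# Multi-label: an independent yes/no decision per label -> any number of labels
labels = ["Craftsman", "yard", "sky", "pool"]
label_scores = np.array([1.2, 0.8, 3.0, -2.0])
predicted_labels = [label for label, p in zip(labels, sigmoid(label_scores)) if p > 0.5]

print(is_spam, predicted_type, predicted_labels)
# True Craftsman ['Craftsman', 'yard', 'sky']
```

The key difference is that softmax makes the classes compete for a single answer, while independent sigmoids let several labels be true at once.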

Results

True positive

- Outputs of a model that predict "true" (positive) and match the actual label in the validation set.

- Example. A model trained to identify whether a house is of architecture style "Craftsman". A true positive happens when the model thinks that an input image is a "Craftsman", and the image is of type "Craftsman".

True negative

- Outputs of a model that predict "false" (negative) and match the actual label in the validation set.

- Example. A model trained to identify whether a house is of architecture style "Craftsman". A true negative happens when the model thinks that an input image is not a "Craftsman", and the image is not of type "Craftsman".

False positive

- Outputs of a model that predict "true" (positive), but the actual label in the validation set is "false".

- Example. A model trained to identify whether a house is of architecture style "Craftsman". A false positive happens when the model thinks that an input image is a "Craftsman", but the image is not of type "Craftsman".

False negative

- Outputs of a model that predict "false" (negative), but the actual label in the validation set is "true".

- Example. A model trained to identify whether a house is of architecture style "Craftsman". A false negative happens when the model thinks that an input image is not a "Craftsman", but the image is of type "Craftsman".
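A short Python sketch that counts each result type by comparing a model's predictions with the validation labels for the "Craftsman" example. The five predictions and labels are made up for illustration.

```python
def confusion_counts(predictions, actuals):
    """Count true/false positives and negatives for a binary classifier.

    True means "is a Craftsman"; False means "is not a Craftsman".
    """
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for predicted, actual in zip(predictions, actuals):
        if predicted and actual:
            counts["TP"] += 1      # predicted Craftsman, and it is one
        elif not predicted and not actual:
            counts["TN"] += 1      # predicted not Craftsman, and it isn't
        elif predicted:
            counts["FP"] += 1      # predicted Craftsman, but it isn't
        else:
            counts["FN"] += 1      # predicted not Craftsman, but it is
    return counts

# Hypothetical model outputs and validation labels for five house photos
predictions = [True, True, False, False, True]
actuals = [True, False, False, True, True]
print(confusion_counts(predictions, actuals))
# {'TP': 2, 'TN': 1, 'FP': 1, 'FN': 1}
```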

Natural Language Processing

Target corpus

- The collection of text used to train an NLP model with transfer learning, typically by fine-tuning a pretrained model on it.

- Example. Documents, tweets, or medical reports.

Tokenization

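- Process in which training text is split into a normalized array of tokens, which are usually words or parts of words. Depending on the tokenizer, text may be lowercased, punctuation may be removed or split into separate tokens, and rare or unknown words may be replaced with a special token.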
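A deliberately simple tokenizer sketch in Python using a regular expression. It lowercases the text and keeps runs of letters, digits, and apostrophes, dropping punctuation. This rule set is an assumption for illustration; NLP libraries generally use more elaborate rules, subword tokens, or special marker tokens.

```python
import re

def tokenize(text):
    """Lowercase the text, then keep runs of letters, digits, and apostrophes as tokens.

    Punctuation is dropped. This is a simplified, hypothetical tokenizer for illustration.
    """
    return re.findall(r"[a-z0-9']+", text.lower())

tokens = tokenize("The house has 3 bedrooms, and a yard!")
print(tokens)  # ['the', 'house', 'has', '3', 'bedrooms', 'and', 'a', 'yard']
```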

Numericalization

- Process in which tokenized text is turned into an array of numbers, where each number is that token's index within the vocabulary (the list of unique tokens seen during training).
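A minimal numericalization sketch in Python. The `<unk>` token at index 0, the alphabetical vocabulary order, and the helper names `build_vocab` and `numericalize` are assumptions for illustration; libraries often order the vocabulary by frequency instead.

```python
from collections import Counter

UNK = "<unk>"  # hypothetical marker for tokens not in the vocabulary

def build_vocab(token_lists, min_count=1):
    """Assign an integer index to every token seen at least min_count times.

    Index 0 is reserved for unknown tokens; the rest are sorted alphabetically
    so the result is deterministic.
    """
    counts = Counter(token for tokens in token_lists for token in tokens)
    kept = sorted(token for token, count in counts.items() if count >= min_count)
    return {token: index for index, token in enumerate([UNK] + kept)}

def numericalize(tokens, vocab):
    """Replace each token with its vocabulary index; unseen tokens map to the UNK index."""
    return [vocab.get(token, vocab[UNK]) for token in tokens]

training_tokens = [["the", "house", "has", "a", "yard"], ["the", "yard", "is", "big"]]
vocab = build_vocab(training_tokens)
ids = numericalize(["the", "big", "garage"], vocab)
print(ids)  # [6, 2, 0]
```

Here "the" maps to 6 and "big" to 2, while "garage", which never appeared in the training text, maps to the unknown index 0.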